Bringing Programmability to Data Storage

How blob storage on Walrus is handled, from write to read, storage to management.

- Walrus, a next-generation decentralized storage protocol, addresses the limitations of centralized cloud storage, such as high costs, single points of failure, and the risk of censorship or privacy violations, while improving on other decentralized storage systems by introducing programmability and increasing performance.

- Blob (Binary Large Object) storage holds arbitrary binary data, including unstructured data that is traditionally hard to accommodate. Blob storage systems are optimized for high durability, availability, and scalability.

- On Walrus, blobs and storage resources can be represented as objects, making them immediately available as resources in MoveVM smart contracts on Sui. As a core innovation, Walrus’ tokenization of data and storage space empowers developers to automate renewals, build data-focused decentralized apps, and innovate with accessible onchain data.

- The lifecycle of a blob on Walrus (writing, storing, reading, and managing) is optimized for decentralization and resilience. This process uses Sui as a secure control plane for metadata and for publishing an onchain Proof-of-Availability (PoA) certificate, which records that the blob has been successfully stored.

- Walrus is chain-agnostic for builders, who can use a range of developer tools and SDKs to bring data from other blockchain ecosystems like Solana and Ethereum. Developers can explore a curated list of tools and infrastructure projects to start building with Walrus today.

Walrus, a next-generation decentralized storage protocol, addresses the limitations of both centralized cloud storage and decentralized storage systems through a protocol that’s performant, highly resilient, and cost-effective. Walrus is a Binary Large Object (blob) storage protocol that allows builders to store, read, manage, and program large data and media files—like video, images, and PDFs—while Walrus’ decentralized architecture ensures security, data availability, and scalability.

One of Walrus’ core innovations is how the protocol makes data and storage capacity available as programmable onchain resources. The lifecycle of a blob on Walrus is managed through interactions with Sui—from initial registration and space acquisition, through encoding and distribution, to node storage and generating an onchain Proof-of-Availability (PoA) certificate—so that Walrus, using Sui as its secure control plane, can remain specialized for efficient and secure blob data storage and retrieval.

This integration creates a powerful programmable data platform. Blobs and storage resources can be represented as objects, making them immediately available as resources in MoveVM smart contracts on Sui. This innovation transforms data storage from a simple utility into a programmable resource, allowing developers to:

- Treat storage space as something that’s ownable, transferable, and tradeable through smart contracts

- Automate storage management, such as by programming periodic renewals

- Build new kinds of data-centric applications and create dynamic interactions between onchain and offchain data

Beyond its use of Sui as a control plane, Walrus itself is chain-agnostic for builders. Developers can bring data from other blockchain ecosystems, including Solana and Ethereum, to Walrus for storage using a range of developer tools and SDKs. You can use Walrus in combination with your preferred development environment and smart contract languages to tailor a custom, decentralized data stack. Builders can also explore Walrus’ growing ecosystem of specialized, integrated services, like Seal, which can be used to bring decentralized encryption and secrets management to Walrus’ blob storage.

Understanding blob storage

In the data world, “blob” is an acronym for Binary Large Object, used for storing binary data (data that can contain non-printable characters as well as arbitrary bit patterns). With blob storage, data is managed as objects that each comprise the data itself, its metadata, and a unique identifier. Blob storage systems are generally optimized for high durability, availability, and scalability for unstructured data.

Unlike structured data systems that require fixed schemas (like tables), or semi-structured data formats (like JSON), blob storage doesn’t enforce a set organizational format. Instead, blobs are usually treated as collections of bytes, which makes them suitable for storing a wide variety of data types—even traditionally hard-to-accommodate unstructured data, like word documents, images, or audio and video files. Blob adaptability further extends beyond typical structured and unstructured data to specialized uses, including blockchain data availability, ZK-proofs, cryptographic artifacts, and even application source code.

The internet’s vast proliferation of unstructured digital information has fueled an ever-growing demand for blob storage, which offers key benefits for storing unstructured data, like scalability, efficiency, and accessibility. In parallel, the demand for blob storage has driven the widespread adoption of massive centralized blob storage solutions—generally offered by cloud service providers, like Amazon S3—leaving the internet’s data vulnerable to centralized outages and failed retrieval.

A desire for greater data sovereignty and resilience is a primary driver for decentralized blob storage. As a decentralized blob storage protocol, Walrus can deliver blob storage within a trust-minimized, censorship-resistant framework, addressing the inherent limitations of centralized control, such as single points of failure and potential for data takedowns.

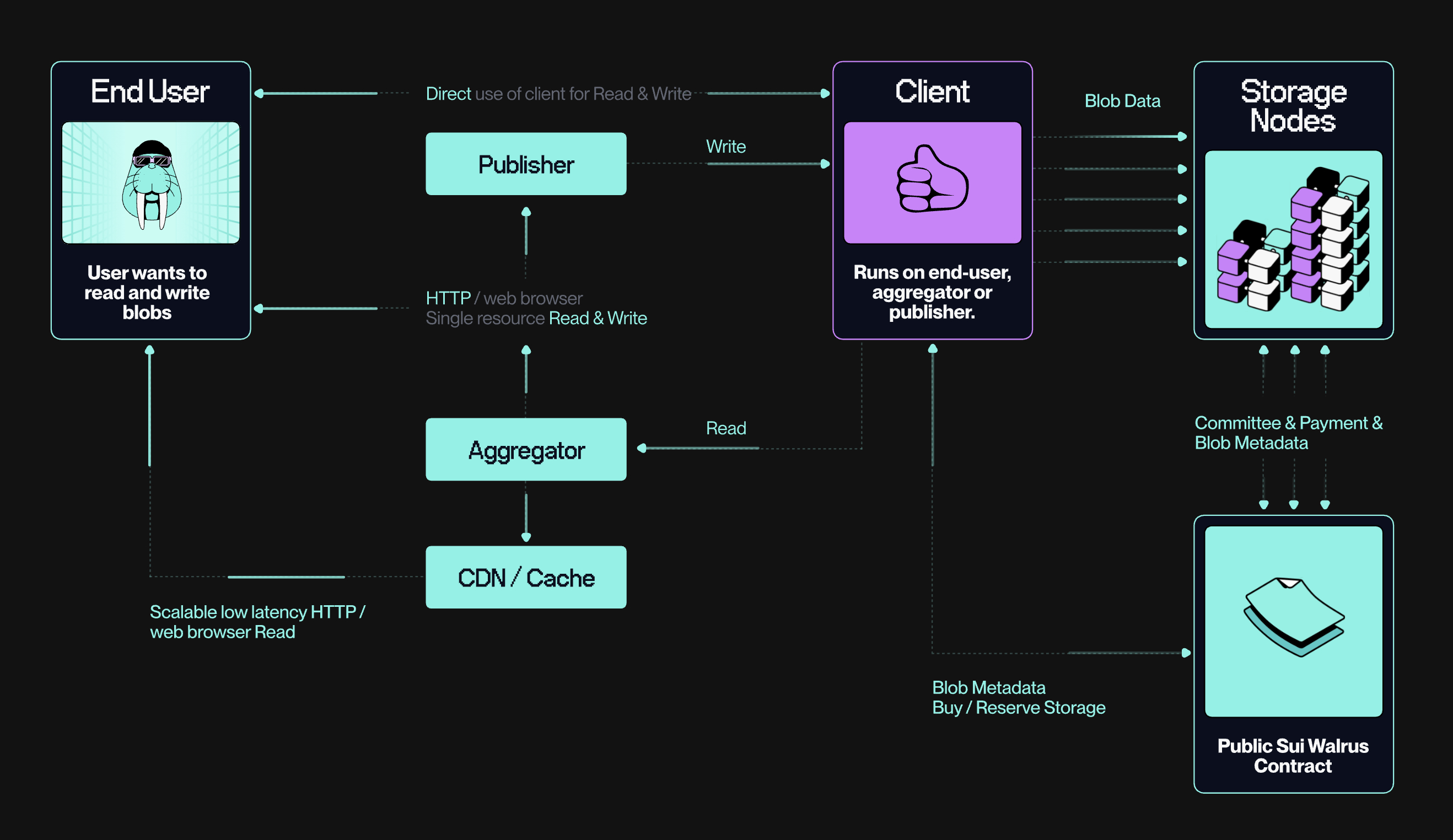

In Walrus, the client orchestrates the data flow

The client acts as the primary interface for users or applications to interact with the Walrus network. The client software is responsible for initiating the blob lifecycle by communicating both with the storage nodes that will hold the data slivers and with Sui, which handles the crucial metadata and contractual aspects of storage.

Beyond the core client-node-Sui interactions, Walrus can integrate with a broader set of services that enhance data accessibility and performance. Publishers or applications might leverage Content Delivery Networks (CDNs) or caching layers that work in conjunction with Walrus and the client to provide read/write services and publish data to the web.

Understanding the blob's fundamental lifecycle, which is managed by the client and validated on Sui, helps to build a deeper understanding of Walrus’ core value and programmability. It is also worth noting that Walrus’ practical utility and performance can be enhanced by surrounding infrastructure, all of which ultimately relies on the core guarantees provided by the Walrus protocol.

The lifecycle of a blob on Walrus

The lifecycle of a blob within the Walrus protocol is a structured process integrated with Sui. While the Walrus protocol is specialized for the efficient and secure storage and retrieval of blob data, it works in tandem with Sui as its secure control plane. Sui manages Walrus’ metadata and Proof-of-Availability certificates, and enables the programmability of stored data.

The blob lifecycle can be broadly categorized into four main phases: writing, storing/maintaining, reading/retrieving, and managing.

Phase 1: Writing a blob

The process of introducing new data to Walrus is orchestrated by the client software, in coordination with Walrus’ committee of decentralized storage nodes and Sui.

The storage nodes are responsible for the physical storage of blob data. However, they do not store entire blobs directly. Instead, blobs are encoded into smaller, redundant pieces called "slivers." Each storage node in the committee holds a collection of these slivers from various blobs.

Acquiring space on Sui:

- The client software starts the process by interacting with the Public Sui Walrus Contract to acquire a “storage resource,” which is a reservation for a specific amount of storage space over a defined duration of time. These storage resources are represented as objects on Sui, making the storage resource accessible through smart contracts and enabling builders to treat storage space as something that’s programmable, ownable, transferable, and tradeable.

- The client submits a series of transactions to secure this storage resource and to register the blob, providing the blob’s size and registering a commitment hash to its content.
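
To make the relationship between a storage resource and a registered blob concrete, here is a minimal sketch of the two records as plain Python data structures. Every name here (`StorageResource`, `RegisteredBlob`, `register_blob`, and all field names) is hypothetical and is not taken from the Walrus contracts. SHA-256 stands in for whatever commitment scheme the protocol actually uses, and the size check ignores encoding overhead.

```python
from dataclasses import dataclass
import hashlib


@dataclass
class StorageResource:
    """Hypothetical stand-in for the onchain storage reservation."""
    size_bytes: int    # reserved capacity
    start_epoch: int   # first epoch covered (inclusive)
    end_epoch: int     # first epoch no longer covered (exclusive)
    owner: str         # current owner; can change like any owned object


@dataclass
class RegisteredBlob:
    """Hypothetical record tying a blob commitment to its storage."""
    commitment: str    # hash committing to the blob's content
    size_bytes: int
    storage: StorageResource


def register_blob(data: bytes, storage: StorageResource) -> RegisteredBlob:
    """Register a blob against a reservation that is large enough for it."""
    if len(data) > storage.size_bytes:
        raise ValueError("blob does not fit in the reserved storage")
    # Assumption: SHA-256 is a placeholder for the real commitment scheme.
    commitment = hashlib.sha256(data).hexdigest()
    return RegisteredBlob(commitment, len(data), storage)


resource = StorageResource(size_bytes=1_024, start_epoch=10, end_epoch=20, owner="alice")
blob = register_blob(b"hello walrus", resource)
resource.owner = "bob"  # the reservation changes hands without touching the blob data
```

Keeping the reservation and the blob as separate records mirrors the idea that storage space is an asset in its own right, one that can be owned and transferred independently of the data it currently holds.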

Encoding with RedStuff and sliver generation:

- Once the storage resource is secured, the client software encodes the blob using Walrus’ RedStuff algorithm (a key technical innovation based on advanced erasure coding).

- This process transforms the original blob into a set of primary and secondary slivers, which introduce the redundancy necessary to ensure fault tolerance and efficient recovery.
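
RedStuff itself is beyond the scope of a short example, but the general idea of erasure coding, where redundant pieces allow lost data to be rebuilt, can be illustrated with a much simpler technique. The sketch below uses a single XOR parity chunk, which is not RedStuff and tolerates only one lost piece. The function names and the chunk count `k` are illustrative assumptions.

```python
from functools import reduce
from typing import Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(blob: bytes, k: int) -> list[bytes]:
    """Split the blob into k equal-size chunks and append one XOR parity chunk."""
    chunk_len = -(-len(blob) // k)  # ceiling division
    padded = blob.ljust(chunk_len * k, b"\0")
    chunks = [padded[i * chunk_len:(i + 1) * chunk_len] for i in range(k)]
    parity = reduce(xor_bytes, chunks)
    return chunks + [parity]


def recover(pieces: list[Optional[bytes]]) -> list[bytes]:
    """Rebuild at most one missing piece by XOR-ing all the others."""
    missing = [i for i, p in enumerate(pieces) if p is None]
    if len(missing) > 1:
        raise ValueError("single parity can only repair one lost piece")
    repaired = list(pieces)
    if missing:
        present = [p for p in pieces if p is not None]
        repaired[missing[0]] = reduce(xor_bytes, present)
    return repaired


pieces = encode(b"walrus blob data", k=4)  # 16 bytes: four 4-byte chunks plus parity
damaged = list(pieces)
damaged[2] = None                          # simulate one lost piece
restored = recover(damaged)
```

A production scheme such as RedStuff adds far more redundancy than this, which is what lets it survive many simultaneous node failures rather than just one.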

Distribution to storage nodes:

- The client then distributes these generated sliver pairs to the storage nodes that are currently active in the Walrus committee. Each node receives its unique pair of primary and secondary slivers for that blob.

Obtaining and publishing the Proof-of-Availability (PoA) certificate:

- After distributing the slivers, the client listens for acknowledgments from the storage nodes. Each acknowledgment is a signed message from a storage node confirming receipt and acceptance of its assigned slivers.

- The client must collect these acknowledgments from a baseline 2/3 quorum of nodes (this quorum is defined at the protocol level, based on the network’s Byzantine tolerance). The collection of signed acknowledgments forms a "write certificate."

- The client then publishes this write certificate on Sui. This onchain publication acts as the official Proof-of-Availability (PoA) certificate for the blob, immutably recording that the blob has been successfully stored and signalling the contractual obligation of the participating storage nodes to maintain those slivers for the duration specified in the storage resource. This process is officially completed once the PoA is fully confirmed on Sui.
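
The acknowledgment-collection step can be sketched as a counting check. This is a simplified model under stated assumptions: the quorum is taken as two-thirds of the committee by node count, signature verification is omitted entirely, and `write_quorum` and `collect_certificate` are hypothetical names rather than part of any Walrus SDK.

```python
def write_quorum(total_nodes: int) -> int:
    """Smallest number of acknowledgments that reaches two-thirds of the nodes."""
    return (2 * total_nodes + 2) // 3  # integer form of ceil(2n / 3)


def collect_certificate(blob_id: str, committee: list[str],
                        acks: list[tuple[str, str]]) -> "list[str] | None":
    """Return the sorted list of acknowledging nodes if the quorum is met, else None.

    Each ack is a (node_id, acknowledged_blob_id) pair. A real client would
    also verify each node's signature; that step is left out of this sketch.
    """
    members = set(committee)
    # Count each committee member once, and only for the blob being written.
    signers = {node for node, acked in acks if node in members and acked == blob_id}
    if len(signers) < write_quorum(len(committee)):
        return None
    return sorted(signers)


committee = [f"node-{i}" for i in range(10)]
acks = [(f"node-{i}", "blob-1") for i in range(7)]
certificate = collect_certificate("blob-1", committee, acks)
```

With ten nodes the threshold works out to seven acknowledgments, so the seven acks above are just enough to form a certificate. Duplicate acks, acks from outside the committee, and acks for a different blob do not count toward the quorum.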

Phase 2: Storing and maintaining a blob

With the PoA certificate established, the responsibility for the blob shifts to the Walrus storage network.

Storage node responsibilities:

- Each storage node that acknowledges a sliver of the blob is obligated to maintain its availability. This includes ensuring the sliver is retrievable, and participating in recovery processes using RedStuff's self-healing capabilities if other parts of the blob's encoded data are lost, or if a peer node needs to reconstruct its own sliver.

- The number of slivers each storage node is assigned to store is proportional to the amount of stake delegated to it by token holders. The more slivers a storage node is assigned to store, the more fees it can receive for storing that data. Delegators receive a percentage of the storage fees based on the proportion of WAL staked.
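
As a worked example of stake-proportional assignment, the sketch below divides a fixed number of sliver slots among nodes according to their stake, using the largest-remainder method to handle rounding. The rounding rule, the term "slots," and the function name are assumptions made for illustration; the source does not specify how Walrus resolves fractional shares.

```python
def assign_slots(stakes: dict[str, int], total_slots: int) -> dict[str, int]:
    """Split total_slots among nodes in proportion to stake (largest remainder)."""
    total_stake = sum(stakes.values())
    if total_stake <= 0:
        raise ValueError("total stake must be positive")
    # Scale each stake by the slot count; the quotient is the guaranteed share.
    scaled = {node: stake * total_slots for node, stake in stakes.items()}
    slots = {node: value // total_stake for node, value in scaled.items()}
    leftover = total_slots - sum(slots.values())
    # Hand remaining slots to the nodes with the largest fractional remainders,
    # breaking ties by node name so the result is deterministic.
    order = sorted(stakes, key=lambda node: (-(scaled[node] % total_stake), node))
    for node in order[:leftover]:
        slots[node] += 1
    return slots


assignment = assign_slots({"a": 50, "b": 30, "c": 20}, total_slots=7)
```

Here node `a` holds half the stake and ends up with four of the seven slots, while `b` and `c` receive two and one. Exact proportionality is impossible with seven slots, so the leftover slot goes to the node whose share was rounded down the most.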

Epoch transitions and committee reconfiguration:

- The committee of active storage nodes can change at each epoch transition: a node joins or leaves when its stake rises above, or falls below, the amount required to remain in the active committee.

- The protocol includes a multi-stage epoch change mechanism to manage these transitions smoothly, ensuring that all stored blobs remain continuously available even as individual nodes join or leave the network’s active committee.

Phase 3: Reading and retrieving a blob

When the client software needs to access a blob stored on Walrus, the following steps take place.

Client request and metadata retrieval:

- The client initiates a request for the blob using its unique blob ID. The first step is to retrieve the blob's metadata from Sui. The metadata, stored as part of the blob's Sui object, includes essential information such as the commitments (hashes) for each of the blob's slivers.

Fetching slivers from storage nodes:

- With the sliver commitments in hand, the client software requests the actual slivers of data from the storage nodes in the committee.

- The client collects responses from multiple nodes, verifying each sliver it receives against its corresponding commitment hash from the metadata to ensure the data’s integrity.

- The client waits until it has met a 1/3 quorum of correct secondary slivers. As opposed to the 2/3 quorum of nodes required to write, this lower read quorum makes reads on Walrus extremely resilient. Blobs can be recovered in all cases even if up to one-third of storage nodes are unavailable, and can be recovered after synchronization is complete even if up to two-thirds of storage nodes are unavailable.
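
The fetch-and-verify loop can be sketched as follows. This is a simplified model: SHA-256 stands in for the protocol's sliver commitments, the read quorum is taken as one-third of the node count rounded up, and the function names are hypothetical.

```python
import hashlib


def commitment(sliver: bytes) -> str:
    """Placeholder commitment: a SHA-256 digest of the sliver."""
    return hashlib.sha256(sliver).hexdigest()


def read_quorum(total_nodes: int) -> int:
    """Smallest number of valid slivers that reaches one-third of the nodes."""
    return (total_nodes + 2) // 3  # integer form of ceil(n / 3)


def collect_valid_slivers(responses, commitments: dict[int, str],
                          total_nodes: int) -> "dict[int, bytes] | None":
    """Keep slivers that match their commitment; stop once the quorum is met.

    `responses` yields (sliver_index, sliver_bytes) pairs in arrival order.
    Returns the verified slivers, or None if too few valid ones arrive.
    """
    needed = read_quorum(total_nodes)
    valid: dict[int, bytes] = {}
    for index, sliver in responses:
        if commitments.get(index) == commitment(sliver):
            valid[index] = sliver
        if len(valid) >= needed:
            return valid
    return None


slivers = {i: f"sliver-{i}".encode() for i in range(9)}
commitments = {i: commitment(s) for i, s in slivers.items()}
responses = [(0, b"corrupted"), (1, slivers[1]), (4, slivers[4]),
             (7, slivers[7]), (8, slivers[8])]
verified = collect_valid_slivers(responses, commitments, total_nodes=9)
```

The corrupted response for index 0 fails its hash check and is discarded, and the loop returns as soon as three valid slivers have arrived, without waiting for the remaining responses.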

Blob reconstruction using RedStuff:

- Once the client software receives the required quorum of valid slivers, it uses the RedStuff decoding algorithm to reconstruct the original blob. The decoder first confirms that enough valid slivers have been collected.

- As a final verification step, the client typically re-encodes the reconstructed blob and computes its blob ID hash. If this re-computed ID matches the original requested blob ID, the blob is considered consistent and valid. Otherwise, an inconsistency is flagged.
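
The re-encode-and-compare check can be illustrated with a toy model. In this sketch, `toy_encode` simply slices the blob into pieces and `blob_id` hashes the per-piece digests together. Both are stand-ins chosen for brevity and do not reflect how RedStuff encodes data or how Walrus derives blob IDs.

```python
import hashlib


def toy_encode(blob: bytes, n: int) -> list[bytes]:
    """Toy deterministic encoding: slice the blob into n consecutive pieces."""
    size = max(1, -(-len(blob) // n))  # ceiling division, at least one byte
    return [blob[i * size:(i + 1) * size] for i in range(n)]


def blob_id(slivers: list[bytes]) -> str:
    """Toy blob ID: a hash over the hashes of all slivers, in order."""
    outer = hashlib.sha256()
    for sliver in slivers:
        outer.update(hashlib.sha256(sliver).digest())
    return outer.hexdigest()


def is_consistent(reconstructed: bytes, requested_id: str, n: int) -> bool:
    """Re-encode the reconstructed blob and compare its ID to the requested one."""
    return blob_id(toy_encode(reconstructed, n)) == requested_id


requested = blob_id(toy_encode(b"hello walrus", 4))
ok = is_consistent(b"hello walrus", requested, 4)
tampered = is_consistent(b"hello walruz", requested, 4)
```

Because the ID is derived from the encoded form, any change to the reconstructed bytes produces a different ID, and the mismatch is what flags the inconsistency.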

Phase 4: Managing stored blobs

Once a blob is stored, its storage period can be extended, or the blob can be deleted.

Blob renewals and payments (extending storage):

- Users can extend the storage duration of their blobs by paying the storage price for the additional time desired, up to a system-defined maximum period (currently two years).

- The transaction updates the associated storage resource object on Sui, prolonging the nodes' obligation to store the data.

- This allows for effectively indefinite storage lifetimes through periodic renewals, which can be programmed via smart contract.
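
The renewal rules can be sketched as a small calculation. The model below assumes a flat price per epoch, measures the maximum period in epochs ahead of the current one, and refuses to renew storage that has already expired. These are illustrative assumptions rather than details confirmed by the text, and the function and parameter names are hypothetical.

```python
def extend_storage(current_epoch: int, end_epoch: int, extra_epochs: int,
                   max_epochs_ahead: int, price_per_epoch: int) -> tuple[int, int]:
    """Return (new_end_epoch, cost) for a renewal, or raise if it is not allowed."""
    if extra_epochs <= 0:
        raise ValueError("extension must cover at least one epoch")
    if end_epoch <= current_epoch:
        raise ValueError("storage has already expired")
    new_end = end_epoch + extra_epochs
    if new_end - current_epoch > max_epochs_ahead:
        raise ValueError("extension exceeds the maximum storage period")
    return new_end, extra_epochs * price_per_epoch


def auto_renew(current_epoch: int, end_epoch: int, renew_below: int,
               extra_epochs: int, max_epochs_ahead: int,
               price_per_epoch: int) -> tuple[int, int]:
    """Renew only when fewer than renew_below epochs of storage remain."""
    if end_epoch - current_epoch >= renew_below:
        return end_epoch, 0  # plenty of time left; nothing to pay
    return extend_storage(current_epoch, end_epoch, extra_epochs,
                          max_epochs_ahead, price_per_epoch)


renewed = auto_renew(current_epoch=10, end_epoch=12, renew_below=3,
                     extra_epochs=5, max_epochs_ahead=52, price_per_epoch=3)
untouched = auto_renew(current_epoch=10, end_epoch=20, renew_below=3,
                       extra_epochs=5, max_epochs_ahead=52, price_per_epoch=3)
```

Calling a check like `auto_renew` once per epoch is one way to picture programmed periodic renewals: each call either does nothing or pushes the end epoch forward, so storage never lapses as long as payments continue.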

Deleting blobs/disassociation:

- The Sui objects that represent stored blobs can be deleted. Although the recorded hash of the stored data is immutable, a blob can be functionally deleted by disassociating its blob ID from the underlying storage resource object on Sui.

- Once disassociated, the storage resource becomes free, and that storage space can be reassociated with a new blob or traded on the secondary market.

- If a client uploads inconsistently encoded slivers (as proven onchain by a quorum of nodes), the network may effectively treat that blob's data as inaccessible or "deleted" by refusing to serve its slivers.

Get started with Walrus blobs—and beyond

Walrus provides a decentralized alternative to traditional cloud storage, with high availability and integrity for blobs at scale. It also improves on decentralized storage methods, introducing programmability, improving economic incentives, reducing costs, providing flexibility for dynamic data, and offering a general-purpose blob storage system.

Representing Walrus-stored blobs as objects on Sui allows developers to use Move smart contracts to interact with and manage their data in novel ways, like automating blob lifecycle management, developing dynamic interactions between onchain and offchain data, and allowing for onchain data verification.

The blob lifecycle on Walrus is at the heart of these benefits, ensuring that every step of the process is optimized for decentralization, resilience, availability, and flexibility.

Learn about Walrus and check out the Walrus documentation to start building with Walrus today! Explore the Awesome Walrus repo for a curated list of developer tools and infrastructure projects in the Walrus ecosystem.

Note: This content is for general educational and informational purposes only and should not be construed or relied upon as an endorsement or recommendation to buy, sell, or hold any asset, investment or financial product and does not constitute financial, legal, or tax advice.