Bad data costs billions. Verifiability is the answer.

Verifiable data is the missing piece in AI, advertising, and beyond.

The biggest threat to AI projects isn't what most people think. It's not computing power, talent, or poorly written algorithms. It's bad data. And when that data is flawed, the consequences ripple beyond AI and into advertising, finance, healthcare, and every other system that relies on information it can't verify.

A shocking 87% of AI projects fail before reaching production due to data quality problems. For a $200B industry, that’s a massive problem. Digital advertising loses nearly one-third of its $750 billion in annual spend to fraud and inefficiency, largely because transaction data can't be verified. Even Amazon isn’t exempt from the challenges of bad data: after spending years developing an AI recruiting tool, the company had to scrap the whole project because of bias in the training data.

In an era where these systems are critical infrastructure, we can’t treat data quality as an afterthought. When an AI model makes a decision or an ad campaign goes live, there's often no way to prove the underlying data is accurate. But there’s a better way forward.

When Bad Data Gets Behind the Wheel

The more critical the task, the more essential good data becomes. Most of us would love to try a self-driving car, but very few of us would get into one trained on data from the worst driver we know.

The problem isn’t always a poorly designed algorithm; it’s what the system is learning from. Feed AI systems biased, inaccurate, or corrupted data, and they’ll amplify those flaws at scale. Amazon's recruiting system didn't choose to discriminate against women; it learned from male-dominated hiring data and replicated that bias. Even a well-designed algorithm couldn’t have overcome that.

The challenge goes even deeper than bad data. Training datasets are collected and prepared without verifiable trails of their origins, modifications, or completeness. When an AI system makes a decision (approving a loan, diagnosing a condition, recommending a hire), there's often no way to verify the quality or provenance of the data that trained it. This makes AI fundamentally untrustworthy for most decisions a human would normally make.

Walrus and the Sui Stack

There’s a lot of talk about building bigger, faster chips and more data centers. But reliable AI requires more than faster computing: it needs data you can verify.

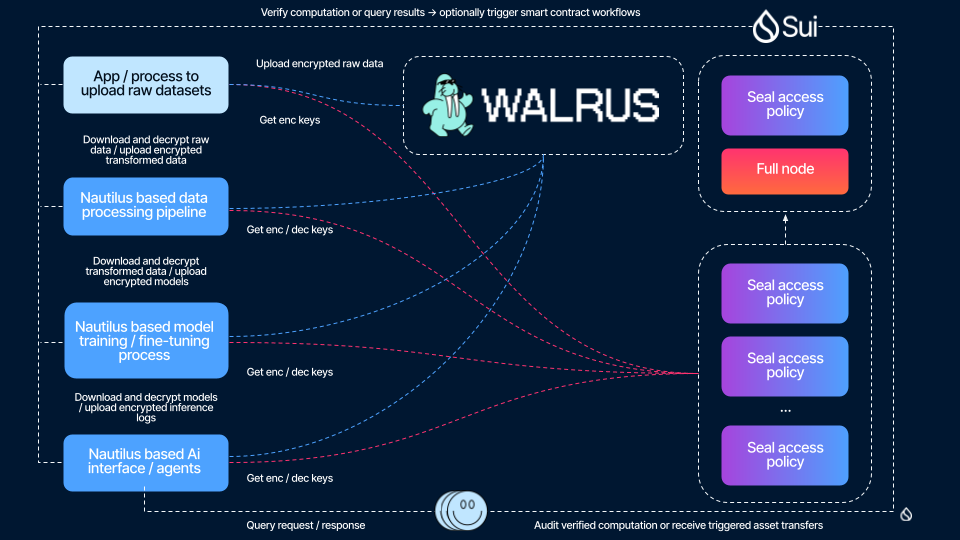

Walrus enables data verification from the ground up. Every file gets a verifiable ID, every change is tracked, and you can easily prove where your data came from and what happened to it. When regulators ask about your fraud detection model's decision, you can pull up the blob ID (a unique identifier generated from the data itself), show them the Sui object tracking its storage history, and prove cryptographically that the training data hasn't been altered.
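To make the idea of a content-derived ID and a tracked change history concrete, here is a minimal Python sketch. It is not Walrus's implementation: Walrus derives blob IDs through its own encoding scheme rather than a plain SHA-256 of the file, and storage history lives in Sui objects rather than a Python list. The names `content_id`, `ProvenanceLog`, and `matches` are hypothetical, chosen only to illustrate the concept.

```python
import hashlib
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first log entry


def content_id(data: bytes) -> str:
    # Hypothetical stand-in for a blob ID: a SHA-256 digest of the bytes themselves.
    return hashlib.sha256(data).hexdigest()


def matches(data: bytes, blob_id: str) -> bool:
    # Data is unaltered only if it still hashes to the ID recorded earlier.
    return content_id(data) == blob_id


def _entry_hash(prev: str, action: str, blob_id: str) -> str:
    # Each entry commits to the previous entry, forming a hash chain.
    return hashlib.sha256(f"{prev}|{action}|{blob_id}".encode()).hexdigest()


@dataclass
class ProvenanceLog:
    # Hypothetical append-only log of changes to a dataset.
    entries: list = field(default_factory=list)

    def record(self, action: str, data: bytes) -> str:
        prev = self.entries[-1]["entry_hash"] if self.entries else GENESIS
        blob_id = content_id(data)
        self.entries.append({
            "action": action,
            "blob_id": blob_id,
            "prev": prev,
            "entry_hash": _entry_hash(prev, action, blob_id),
        })
        return blob_id

    def is_intact(self) -> bool:
        # Recompute the chain; any edited entry breaks every hash after it.
        prev = GENESIS
        for e in self.entries:
            if e["prev"] != prev or e["entry_hash"] != _entry_hash(prev, e["action"], e["blob_id"]):
                return False
            prev = e["entry_hash"]
        return True


if __name__ == "__main__":
    log = ProvenanceLog()
    raw = b"applicant,income,label\n1,52000,1\n2,48000,0\n2,48000,0\n"
    log.record("upload", raw)
    cleaned = b"applicant,income,label\n1,52000,1\n2,48000,0\n"
    v2 = log.record("deduplicate", cleaned)
    print(matches(cleaned, v2))                  # True
    print(matches(cleaned + b"3,1,1\n", v2))     # False
    print(log.is_intact())                       # True
```

Because the ID is computed from the bytes, anyone holding the data can recheck it independently; the hash chain adds the ordering of changes, so rewriting an earlier step is detectable too.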

Walrus works alongside the rest of the Sui Stack, which coordinates programs onchain, to help ensure data is trustworthy, secure, and verifiable.

Verifiable Data in Action: How Alkimi Fixes Adtech’s Data Problem

Digital advertising is another industry suffering the consequences of bad data. Advertisers pour money into a $750 billion market, but face inaccurate reporting and rampant fraud. Transaction records are scattered across platforms, impressions could be from bots, and the systems measuring performance are the same ones profiting from it.

Walrus is helping Alkimi reshape the adtech industry into one advertisers can trust. Every ad impression, bid, and transaction gets stored on Walrus with a tamper-proof record. The platform offers encryption for sensitive client information and can process reconciliation with cryptographic proof of accuracy, making it ideal for use cases where data has to be verifiable.
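One common way to get a cryptographic check on reconciliation is a Merkle root: each party hashes its own copy of the impression records into a single fingerprint, and matching roots mean the record sets are identical. The sketch below is not presented as Alkimi's actual pipeline; it assumes records are already serialized to bytes and sorts them into a canonical order, and the record format and the names `merkle_root` and `reconcile` are hypothetical.

```python
import hashlib


def _h(b: bytes) -> bytes:
    return hashlib.sha256(b).digest()


def merkle_root(records: list[bytes]) -> str:
    # Leaves and internal nodes get different prefixes so one can't pose as the other.
    if not records:
        return _h(b"").hex()
    level = [_h(b"\x00" + r) for r in records]
    while len(level) > 1:
        nxt = [_h(b"\x01" + level[i] + level[i + 1]) for i in range(0, len(level) - 1, 2)]
        if len(level) % 2 == 1:
            nxt.append(level[-1])  # carry an unpaired node up unchanged
        level = nxt
    return level[0].hex()


def reconcile(ours: list[bytes], theirs: list[bytes]) -> bool:
    # Sort first so both sides hash the same canonical order (an assumption).
    return merkle_root(sorted(ours)) == merkle_root(sorted(theirs))


if __name__ == "__main__":
    publisher = [b"imp:1001|cpm:2.10", b"imp:1002|cpm:1.95", b"imp:1003|cpm:2.40"]
    advertiser = list(reversed(publisher))
    print(reconcile(publisher, advertiser))                     # True
    print(reconcile(publisher, publisher + [publisher[-1]]))    # False: double-counted impression
```

Carrying an unpaired node up unchanged, rather than duplicating it, keeps a repeated final record (a double-counted impression) from producing the same root as the original set. Only the roots need to be exchanged, so neither party has to reveal its raw records to confirm they agree.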

And adtech is just the beginning. AI developers could audit training data for bias by using datasets with cryptographically verified origins. DeFi protocols could tokenize verified data as collateral, the way AdFi turns proven ad revenue into programmable assets. Data markets could grow as organizations empower users to monetize their data while maintaining privacy.

All of this is possible because data can finally be proven instead of blindly trusted.

Bad Data Stops Here

Bad data has held back entire industries for too long. Until we can trust our data, we can’t make real progress toward the innovations we hope the 21st century will bring, from AI to more trustworthy DeFi systems that prevent fraud and lock out bad actors in real time.

Walrus forms the foundation of that trust layer, helping companies build more reliable systems from the ground up. By building on a platform that supports verifiable data, developers can trust from day one that their data tells a full, unbiased story.